Chaos is where the value is

We want to understand the systems we live within, and we want them to make sense.

But over time all systems tend towards chaos, becoming less understandable and less legible.

Drew Austin describes the sensation of the internet trending towards chaos:

Amazon search results are increasingly clogged with sponsored items and SEO word salad, a trash pile through which users must sift to find what they actually want. Google search results have followed a similar trajectory. Most social networks, meanwhile, seem to be barely suppressing a tsunami of bots and spam at any given moment, while simultaneously encouraging the users themselves to post like bots (think of every Instagram caption that’s jammed full of hashtags).

People don’t want to go to Amazon anymore because it’s cluttered with trash. But Amazon has also tended towards trash because it’s what increases revenue. Revenue isn’t a measure of usability, but it is a measure of economic value. That economic value is increasing despite the experience of shopping obviously becoming more chaotic and unpleasant.

As Drew points out, part of the appeal of AI is that it hides the chaos and “enshittification” of the internet, brushing the mess under the rug.

AI promises to help clean the mess up, by removing humans from various steps of the process, streamlining other steps, and making today’s crude hacks unnecessary. When more of the internet consists of computers talking directly to one another, whatever SEO still occurs will happen in the background; AI-generated objects will themselves be optimized, with less need for descriptive text—a condition foreshadowed by TikTok’s UX. The result of all this may still be ugly, but the reasons won’t be as obvious. It will likely feel less “messy.”

Our understanding of how AI systems work is limited, and they’re largely out of our control. For some folks that’s a deal breaker, and one that they shout from the rooftops. For others, that’s the entire value proposition.

Online shopping isn’t the only thing that has been enshittified; software development is also a victim. We’ve come from the pure land of writing C code in the ’80s, on machines you could understand from top to bottom, to gigabytes of node_modules and an ever-expanding, unknowable pile of dependencies and layers of abstraction.

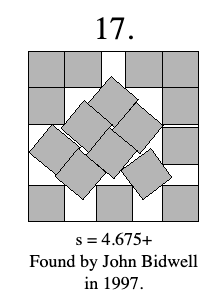

The discontent with chaos is also embodied by the 17 squares in a larger square meme. It turns out the mathematically optimal way to pack 17 squares within a larger square is…more chaotic than you’d expect. That triggers revulsion for some—the desire for control over chaos coming from a sense of aesthetics.

But this sense of aesthetics is also abstract. Squares don’t exist like this in nature. It’s something we made up. Humans invented abstraction to escape chaos.

We found the divine in mathematics and abstraction, but as soon as we did we cursed ourselves. The divine is recursive, and inevitably tends back towards nature. We thought we had escaped nature with the creation of concept, but nature comes creeping back in, like garden weeds that just won’t quit. And every time we cut back the weeds they come back much stronger, and more resistant to the pesticides we’ve tried clearing them out with previously.

In Conway’s Game of Life we identify patterns and construct little critters within chaos. These have names like “blinker”, “toad”, and “beacon”.

But in many cases Conway’s Game of Life either explodes into chaos or dies down into desolation.
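To make the critters concrete, here is a minimal Python sketch of the standard Game of Life rules on an unbounded grid. The `step` function, the coordinate layouts, and the `lonely_pair` seed are my own illustrative choices, not taken from any particular library. It shows the three named patterns settling into a two-step rhythm, and a seed that resolves straight to desolation.

```python
from collections import Counter

def step(live):
    """Advance a set of live (row, col) cells by one generation."""
    # Count how many live neighbours every nearby cell has.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # A cell is alive next turn with exactly 3 neighbours,
    # or with 2 neighbours if it is already alive.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}

# The critters named above, written as sets of live coordinates.
blinker = {(0, 0), (0, 1), (0, 2)}
toad = {(0, 1), (0, 2), (0, 3), (1, 0), (1, 1), (1, 2)}
beacon = {(0, 0), (0, 1), (1, 0), (1, 1),
          (2, 2), (2, 3), (3, 2), (3, 3)}

# A hypothetical seed with too little going on to survive.
lonely_pair = {(0, 0), (0, 1)}

for name, pattern in [("blinker", blinker), ("toad", toad), ("beacon", beacon)]:
    changed = step(pattern) != pattern
    returned = step(step(pattern)) == pattern
    print(name, "oscillates with period 2:", changed and returned)

print("lonely pair survivors after one step:", len(step(lonely_pair)))
```

Storing only the live cells as a set keeps the grid unbounded, so a pattern is free to sprawl or die without hitting an edge.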

We can always build fresh new systems to satisfy our desire for control. And they’ll be beautiful, and meaningful, and people will love them. But the most successful of these will grow. And with that growth comes change, additional variables, and then inevitably, chaos.

Small systems are too precious to be broadly valuable, and chaotic systems repulse us, so we’ll begin the cycle anew.

The risk is that we don’t acknowledge that this is what’s happening, and strive towards some large and valuable system without the aesthetic drawbacks of chaos by tucking them under the covers, as we are with AI.

Because the risk with tucking chaos under the covers is that you don’t know whether you’ve seeded the system, like Conway’s Game of Life, with an abstraction that resolves to desolation rather than the wonderful, lively, unintelligible, and valuable sprawl of chaos.
